Brian Long Consultancy & Training Services Ltd.

February 2012

Accompanying source files available through this download link

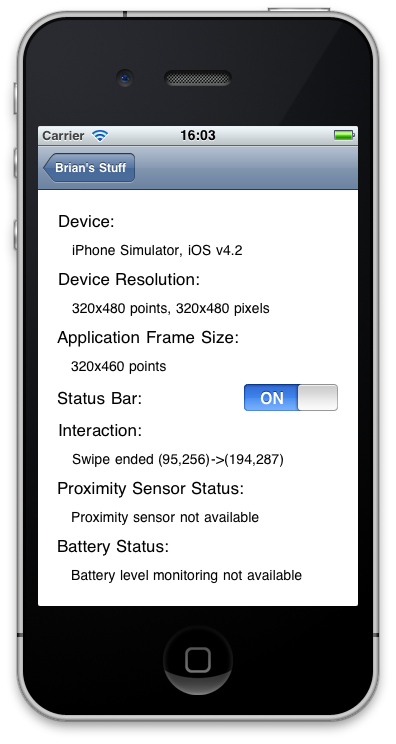

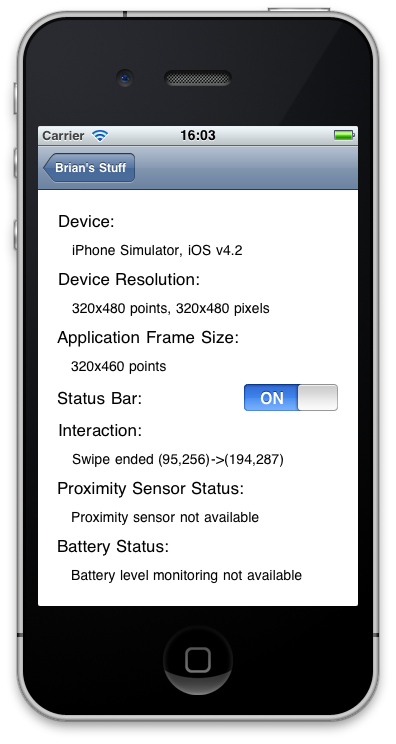

The final label to address in the Info Page relates to interaction and is designed to give information about taps, swipes, shakes and rotations. The last of these is straightforward, as we have already looked at device rotation. We need to make ShouldAutorotateToInterfaceOrientation() return true regardless of the orientation passed in and then write some code in WillAnimateRotation():

    public override void WillAnimateRotation(UIInterfaceOrientation toInterfaceOrientation, double duration)
    {
        switch (toInterfaceOrientation)
        {
            case UIInterfaceOrientation.Portrait:
                interactionLabel.Text = "iPhone is oriented normally";
                break;
            case UIInterfaceOrientation.LandscapeLeft:
                interactionLabel.Text = "iPhone has been rotated right";
                break;
            case UIInterfaceOrientation.PortraitUpsideDown:
                interactionLabel.Text = "iPhone is upside down";
                break;
            case UIInterfaceOrientation.LandscapeRight:
                interactionLabel.Text = "iPhone has been rotated left";
                break;
        }
        SetTimerToClearMotionLabel();
        UpdateUIMetrics();
    }

Note: since we are not actually reorganizing the UI and are just responding to a change in orientation, it might be better to use the notification center and register an observer that is called in response to the orientation change notification.

    NSNotificationCenter.DefaultCenter.AddObserver(UIDevice.OrientationDidChangeNotification,
        OrientationChanged);
    ...
    private void OrientationChanged(NSNotification notification)
    {
        switch (InterfaceOrientation)
        {
            case UIInterfaceOrientation.Portrait:
                interactionLabel.Text = "iPhone is oriented normally";
                break;
            case UIInterfaceOrientation.LandscapeLeft:
                interactionLabel.Text = "iPhone has been rotated right";
                break;
            case UIInterfaceOrientation.PortraitUpsideDown:
                interactionLabel.Text = "iPhone is upside down";
                break;
            case UIInterfaceOrientation.LandscapeRight:
                interactionLabel.Text = "iPhone has been rotated left";
                break;
        }
        SetTimerToClearMotionLabel();
        UpdateUIMetrics();
    }

Whenever an interaction is noticed, the code updates the interaction label and then calls a helper routine that uses a timer to reset the label text after three seconds. The resolution labels are also updated with another call to UpdateUIMetrics().

    NSTimer ClearMotionLabelTimer;
    ...
    private void SetTimerToClearMotionLabel()
    {
        if (ClearMotionLabelTimer != null)
            ClearMotionLabelTimer.Invalidate();
        ClearMotionLabelTimer = NSTimer.CreateScheduledTimer(3, () =>
        {
            interactionLabel.Text = "None";
            ClearMotionLabelTimer = null;
        });
    }

This code should look familiar, as it is very similar to the timer code used for the proximity sensor. In this case, however, we require a one-shot timer to reset the label instead of one that keeps firing, so a scheduled timer is created rather than a scheduled repeating timer.
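To make the distinction concrete, here is a minimal sketch that sets the two kinds of timer side by side. The class name TimerSketch, its fields and the Console output are hypothetical and are not part of the accompanying source; the sketch assumes the MonoTouch.Foundation namespace used by MonoTouch at the time of writing.

```csharp
using System;
using MonoTouch.Foundation;

// Hypothetical helper class, for illustration only.
public class TimerSketch
{
    NSTimer oneShotTimer;
    NSTimer repeatingTimer;

    public void Start()
    {
        // One-shot: fires once after three seconds and is then finished.
        oneShotTimer = NSTimer.CreateScheduledTimer(TimeSpan.FromSeconds(3), () =>
        {
            Console.WriteLine("One-shot timer fired");
            oneShotTimer = null;
        });

        // Repeating: fires every second until it is invalidated.
        repeatingTimer = NSTimer.CreateRepeatingScheduledTimer(TimeSpan.FromSeconds(1), () =>
        {
            Console.WriteLine("Repeating timer fired");
        });
    }

    public void Stop()
    {
        // Invalidating a timer removes it from the run loop.
        if (oneShotTimer != null)
        {
            oneShotTimer.Invalidate();
            oneShotTimer = null;
        }
        if (repeatingTimer != null)
        {
            repeatingTimer.Invalidate();
            repeatingTimer = null;
        }
    }
}
```

A repeating timer has to be invalidated explicitly when it is no longer wanted, whereas the one-shot timer only needs invalidating if it is to be cancelled before it fires, which is what SetTimerToClearMotionLabel() does when a new interaction arrives.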

The iPhone recognizes when you shake it (if the application chooses to enable this). The standard use of this feature is undo/redo, but what a shake means in your application is up to you.

But how do you enable shake recognition in the first place? A few things need to be done. The shake gesture is usually considered to be directed at the view, but we can pick it up in our view controller if we configure it correctly. The view controller must declare that it can become the first responder and must then actually become the first responder. Then we need to enable shake support, and finally we need to override the MotionEnded method, where shake detection can take place.

By default a view controller’s CanBecomeFirstResponder property returns false, which stymies our efforts to detect shakes. Fortunately, the getter for this property is declared virtual and so can be overridden.

    public override bool CanBecomeFirstResponder
    {
        get { return true; }
    }

With calls to BecomeFirstResponder() and ResignFirstResponder() added to ViewDidAppear() and ViewDidDisappear() respectively, that covers the first step. To enable shake support, add this to ViewDidAppear():

    UIApplication.SharedApplication.ApplicationSupportsShakeToEdit = true;
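The first responder calls are described above but not listed, so the following sketch shows one way the pieces might sit together in a view controller. The class name ShakeAwareViewController is hypothetical, and the placement of the base calls is an assumption rather than a copy of the accompanying source.

```csharp
using MonoTouch.UIKit;

// Hypothetical controller, for illustration only.
public class ShakeAwareViewController : UIViewController
{
    // Declare that this controller is able to become the first responder.
    public override bool CanBecomeFirstResponder
    {
        get { return true; }
    }

    public override void ViewDidAppear(bool animated)
    {
        base.ViewDidAppear(animated);
        // Enable shake support for the application.
        UIApplication.SharedApplication.ApplicationSupportsShakeToEdit = true;
        // Become the first responder so that motion events are delivered here.
        BecomeFirstResponder();
    }

    public override void ViewDidDisappear(bool animated)
    {
        // Give up first responder status when the view goes away.
        ResignFirstResponder();
        base.ViewDidDisappear(animated);
    }
}
```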

That leaves the last step of actually responding to a detected shake:

    public override void MotionEnded(UIEventSubtype motion, UIEvent evt)
    {
        if (motion == UIEventSubtype.MotionShake)
        {
            interactionLabel.Text = "iPhone was shaken";
            SetTimerToClearMotionLabel();
        }
    }

If you were using a shake for its more common purpose of undo or redo, you would need to look into the NSUndoManager class to help implement this.

Responding to the user tapping on the screen can be done in a few ways. In this application we’ll take the approach of overriding the TouchesBegan(), TouchesMoved() and TouchesEnded() methods to report what is going on. This allows taps, double-taps and multi-taps to be detected and, potentially, swipe gestures too. (In general, for recognizing gestures as opposed to simple taps, you would be well advised to look into gesture recognizers, which take care of the hard work of tracking the sequence of coordinates for you.) TouchesCancelled() completes the set of virtual methods that support touch operations; it informs you when a touch operation ends abruptly due to something like a low memory condition.
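For comparison, here is a minimal sketch of the gesture recognizer approach mentioned above, which this application does not use. The class name SwipeSketchViewController, the selector name HandleSwipeLeft: and the Console output are all hypothetical; the sketch uses the target/selector form of the recognizer constructor and assumes the MonoTouch namespaces of the time.

```csharp
using System;
using MonoTouch.Foundation;
using MonoTouch.ObjCRuntime;
using MonoTouch.UIKit;

// Hypothetical controller, for illustration only.
public class SwipeSketchViewController : UIViewController
{
    public override void ViewDidLoad()
    {
        base.ViewDidLoad();

        // This recognizer watches for a single direction; add further
        // recognizers if other directions are of interest.
        var swipeLeft = new UISwipeGestureRecognizer(this, new Selector("HandleSwipeLeft:"));
        swipeLeft.Direction = UISwipeGestureRecognizerDirection.Left;
        View.AddGestureRecognizer(swipeLeft);
    }

    // Called by the recognizer once it has decided that a left swipe took place.
    [Export("HandleSwipeLeft:")]
    void HandleSwipeLeft(UISwipeGestureRecognizer recognizer)
    {
        var coord = recognizer.LocationInView(View);
        Console.WriteLine("Swipe left at ({0},{1})", coord.X, coord.Y);
    }
}
```

The recognizer does the coordinate tracking itself, so the handler only runs when a complete swipe has been identified, in contrast to the touch overrides, which report every phase of every touch.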

    using System.Drawing;
    ...
    private PointF StartCoord;
    ...
    private string DescribeTouch(UITouch touch)
    {
        string desc;
        switch (touch.TapCount)
        {
            case 0:
                desc = "Swipe";
                break;
            case 1:
                desc = "Single tap";
                break;
            case 2:
                desc = "Double tap";
                break;
            default:
                desc = "Multiple tap";
                break;
        }
        switch (touch.Phase)
        {
            case UITouchPhase.Began:
                desc += " started";
                break;
            case UITouchPhase.Moved:
                desc += " moved";
                break;
            case UITouchPhase.Stationary:
                desc += " hasn't moved";
                break;
            case UITouchPhase.Ended:
                desc += " ended";
                break;
            case UITouchPhase.Cancelled:
                desc += " cancelled";
                break;
        }
        return desc;
    }

    public override void TouchesBegan(NSSet touches, UIEvent evt)
    {
        var touchArray = touches.ToArray<UITouch>();
        if (touches.Count > 0)
        {
            var coord = touchArray[0].LocationInView(touchArray[0].View);
            if (touchArray[0].TapCount < 2)
                StartCoord = coord;
            interactionLabel.Text = string.Format("{0} ({1},{2})",
                DescribeTouch(touchArray[0]), coord.X, coord.Y);
        }
    }

    public override void TouchesMoved(NSSet touches, UIEvent evt)
    {
        var touchArray = touches.ToArray<UITouch>();
        if (touches.Count > 0)
        {
            var coord = touchArray[0].LocationInView(touchArray[0].View);
            interactionLabel.Text = string.Format("{0} ({1},{2})",
                DescribeTouch(touchArray[0]), coord.X, coord.Y);
        }
    }

    public override void TouchesEnded(NSSet touches, UIEvent evt)
    {
        var touchArray = touches.ToArray<UITouch>();
        if (touches.Count > 0)
        {
            var coord = touchArray[0].LocationInView(touchArray[0].View);
            interactionLabel.Text = string.Format("{0} ({1},{2})->({3},{4})",
                DescribeTouch(touchArray[0]), StartCoord.X, StartCoord.Y, coord.X, coord.Y);
            SetTimerToClearMotionLabel();
        }
    }

These methods are passed a set of UITouch objects and can potentially support multi-touch operations. In the program as it stands this will not happen, as multi-touch has not been enabled, so touchArray will hold a single UITouch object in each case. Notice that the coordinate of the first tap is recorded in StartCoord, declared in the view controller class, allowing the message describing a double-tap or swipe to include this starting coordinate as well as the ending coordinate, as shown in the screenshot.

If you need to support multi-touch in your iPhone application then you’ll need to add this to one of your start-up methods, such as ViewDidLoad() or Initialize():

    View.MultipleTouchEnabled = true;

To simulate multi-touch in the iPhone Simulator, hold down the Option (or Alt) key, though remember this application only looks for single touches.
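If you did want to look at every touch rather than just the first, a sketch along the following lines might serve as a starting point. The class name MultiTouchSketchViewController and the Console output are hypothetical; the calls on NSSet and UITouch are the same ones used in the listings above.

```csharp
using System;
using MonoTouch.Foundation;
using MonoTouch.UIKit;

// Hypothetical controller, for illustration only.
public class MultiTouchSketchViewController : UIViewController
{
    public override void ViewDidLoad()
    {
        base.ViewDidLoad();
        // Without this the view only ever reports one touch at a time.
        View.MultipleTouchEnabled = true;
    }

    public override void TouchesBegan(NSSet touches, UIEvent evt)
    {
        base.TouchesBegan(touches, evt);
        // Report every touch that began in this event, not just the first.
        foreach (var touch in touches.ToArray<UITouch>())
        {
            var coord = touch.LocationInView(View);
            Console.WriteLine("Touch began at ({0},{1})", coord.X, coord.Y);
        }
    }
}
```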
